In this article, I talk about how to build a system like Twitter. I focus on the problems that come up when very famous people, like Elon Musk, tweet and many people see it at once. I’ll share the basic steps, common issues, and how to keep everything running smoothly. My goal is to give you a simple guide on how to make and run such a system.

System Requirements

Functional Requirements:

- User Management: Includes registration, login, and profile management.

- Tweeting: Enables users to broadcast short messages.

- Retweeting: This lets users share others’ content.

- Timeline: Showcases tweets from the user and those they follow.

Non-functional Requirements:

- Scalability: Must accommodate millions of users.

- Availability: High uptime is the goal, achieved through multi-regional deployments.

- Latency: Prioritizes real-time data retrieval and instantaneous content updates.

- Security: Ensures protection against unauthorized breaches and data attacks.

Architecture Overview

Scalability

- Load Balancer: Directs traffic to multiple servers to balance the load.

- Microservices: Functional divisions ensure scalability without interference.

- Auto Scaling: Adjusts resources based on the current demand.

High Availability

- Multi-Region Deployment: Geographic redundancy ensures uptime.

- Data Replication: Databases like DynamoDB replicate data across different locations.

- CDN: Content Delivery Networks ensure swift asset delivery, minimizing latency.

Security

- Authentication: OAuth 2.0 for stringent user validation.

- Authorization: Role-Based Access Control (RBAC) defines user permissions.

- Encryption: SSL/TLS for data during transit; AWS KMS for data at rest.

- DDoS Protection: AWS Shield protects against volumetric attacks.

Data Design (NoSQL, e.g., DynamoDB)

User Table

Tweets Table

Timeline Table

Multimedia Content Storage (Blob Storage)

In the multimedia age, platforms akin to Twitter necessitate a system adept at managing images, GIFs, and videos. Blob storage, tailored for unstructured data, is ideal for efficiently storing and retrieving multimedia content, ensuring scalable, secure, and prompt access.

Backup Databases

In the dynamic world of microblogging, maintaining data integrity is imperative. Backup databases offer redundant data copies, shielding against losses from hardware mishaps, software anomalies, or malicious intents. Strategically positioned backup databases bolster quick recovery, promoting high availability.

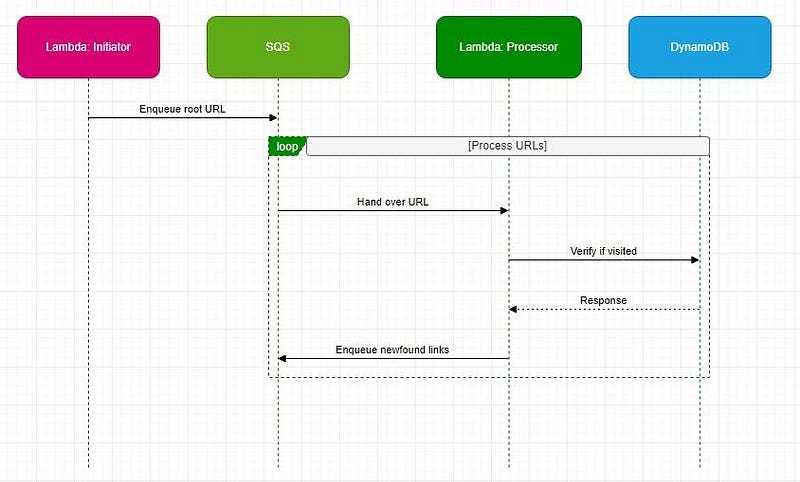

Queue Service

The real-time interaction essence of platforms like Twitter underscores the importance of the Queue Service. This service is indispensable when managing asynchronous tasks and coping with sudden traffic influxes, especially with high-profile tweets. This queuing system:

- Handles requests in an orderly fashion, preventing server inundations.

- Decouples system components, safeguarding against cascading failures.

- Preserves system responsiveness during high-traffic episodes.

Workflow Design

Standard Workflow

- Tweeting: User submits a tweet → Handled by the Tweet Microservice → Authentication & Authorization → Stored in the database → Updated on the user’s timeline and followers’ timelines.

- Retweeting: User shares another’s tweet → Retweet Microservice handles the action → Authentication & Authorization → The retweet is stored and updated on timelines.

- Timeline Management: A user’s timeline combines tweets, retweets, and tweets from users they follow. Caching mechanisms like Redis can enhance timeline retrieval speed for frequently accessed ones.

Enhanced Workflow Design

Tweeting by High-Profile Users (high retrieval rate):

- Tweet Submission: Elon Musk (or any high-profile user) submits a tweet.

- Tweet Microservice Handling: The tweet is directed to the Tweet Microservice via the Load Balancer. Authentication and Authorization checks are executed.

- Database Update: Once approved, the tweet is stored in the Tweets Table.

- Deferred Update for Followers: High-profile tweets can be efficiently disseminated without overloading the system using a publish/subscribe (Pub/Sub) mechanism.

- Caching: Popular tweets, due to their high retrieval rate, benefit from caching mechanisms and CDN deployments.

- Notifications: A selective notification system prioritizes active or frequent interaction followers for immediate notifications.

- Monitoring and Auto-scaling: Resources are adjusted based on real-time monitoring to handle activity surges post high-profile tweets.

Advanced Features and Considerations

Though the bedrock components of a Twitter-esque system are pivotal, integrating advanced features can significantly boost user experience and overall performance.

Trending Topics and Analytics

A hallmark of platforms like Twitter is real-time trend spotting. An ever-watchful service can analyze tweets for patterns, hashtags, or mentions, displaying live trends. Combined with analytics, this offers insights into user patterns and preferences, peak tweeting times, and favoured content.

Direct Messaging

Given the inherently public nature of tweets, a direct messaging system serves as a private communication channel. This feature necessitates additional storage, retrieval mechanisms, and advanced encryption measures to preserve the sanctity of private interactions.

Push Notifications

To foster user engagement, real-time push notifications can be implemented. These alerts can inform users about new tweets, direct messages, mentions, or other salient account activities, ensuring the user stays connected and engaged.

Search Functionality

With the exponential growth in tweets and users, a sophisticated search mechanism becomes indispensable. An advanced search service, backed by technologies like ElasticSearch, can render the task of content discovery effortless and precise.

Monetization Strategies

Integrating monetisation mechanisms is paramount to ensure the platform’s sustainability and profitability. This includes display advertisements, promoted tweets, business collaborations, and more. However, striking a balance is crucial, ensuring these monetization strategies don’t intrude on the user experience.

To make a site like Twitter, you need a good system, strong safety, and features people like. Basic things like balancing traffic, organizing data, and keeping it safe are a must. But what really makes a site stand out are the new and advanced features. By thinking carefully about all these things, you can build a site that’s big and safe, but also fun and easy for people to use.

If you enjoyed reading this and would like to explore similar content, please refer to the following link:

System Design 101: Adapting & Evolving Design Patterns in Software Development

Enterprise Software Development 101: Navigating the Basics

Designing an AWS-Based Notification System

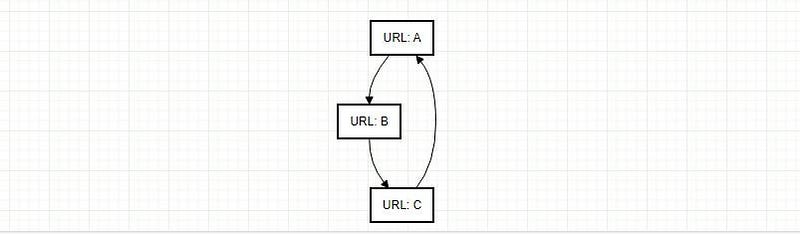

System Design Interview: Serverless Web Crawler using AWS

AWS-Based URL Shortener: Design, Logic, and Scalability

In Plain English

Thank you for being a part of our community! Before you go:

- Be sure to clap and follow the writer! 👏

- You can find even more content at PlainEnglish.io 🚀

- Sign up for our free weekly newsletter. 🗞️

- Follow us: Twitter(X), LinkedIn, YouTube, Discord.

- Check out our other platforms: Stackademic, CoFeed, Venture.